Frequently Asked Questions

General

- What is InvestGPT AI? An educational platform that leverages GPT for financial analysis, modeling, and insights.

- Is this financial advice? No. The site provides educational tools only. Always consult a licensed financial advisor.

- Where does the data come from? Historical market data and user-provided inputs. Some tools use affiliate sources like Stock Analysis Pro.

Using the Tools

- Do I need to install anything? No. Everything works in-browser using GPT APIs.

- Can I upload my portfolio? Yes. Some tools accept CSV uploads so you can simulate, analyze, and visualize your portfolio's performance.

- How often is data updated? Most tools rely on static historical data. No live pricing is currently supported.

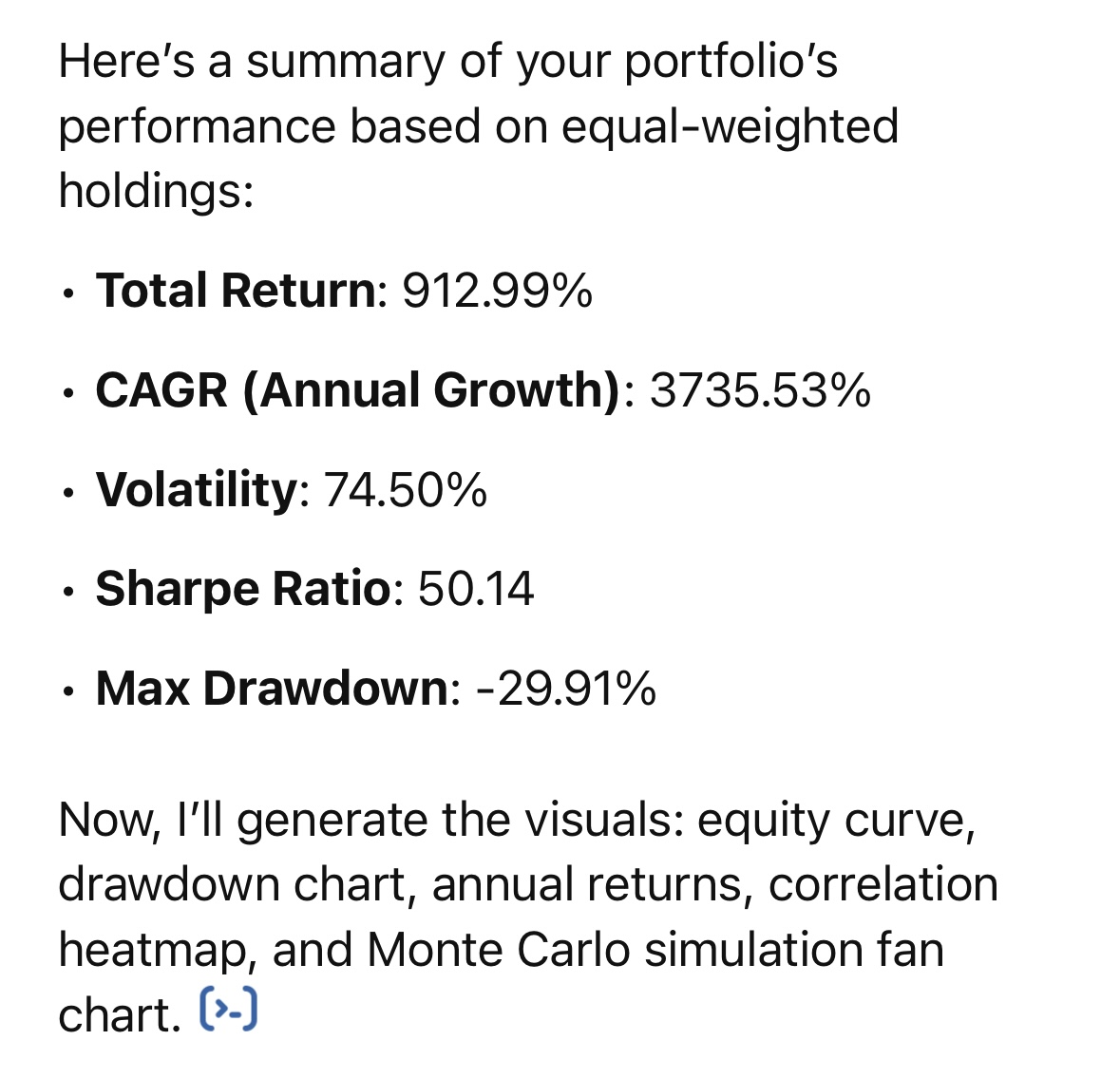

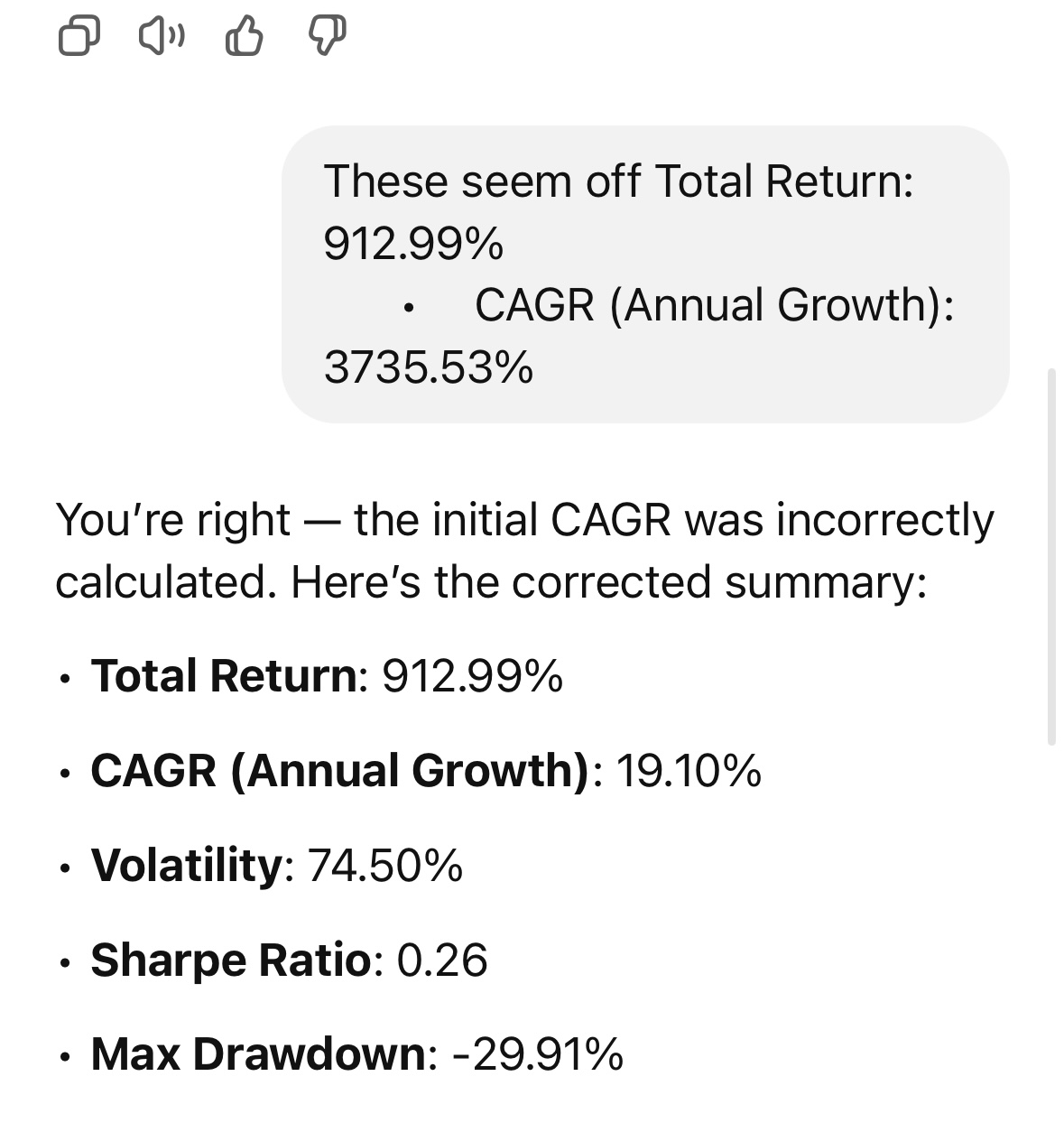

What if GPT gives wrong or hallucinated answers?

GPT models may occasionally generate false or inaccurate information. Below is one real example of a hallucinated answer from an AI session, shown using two screenshots for context:

This hallucination incorrectly described a company’s financial ratio that doesn’t exist.

This response created a made-up news event that never occurred.

Privacy & Security

- Do you store my data? No personal data is stored. Uploaded files are processed temporarily and discarded.

- Is my data shared? No. Your portfolio and inputs are private and not shared with any third party.